Azure Data Factory is a cloud-based Microsoft service that collects raw business data and transforms it into usable information. Azure Data Factory engineers are in high demand, so cracking the interview takes some preparation. This blog lists the most common Azure Data Factory interview questions asked during data engineering job interviews.

Whether you’re a beginner or an experienced professional, these questions will help you prepare for your data engineering job interview:

Azure Data Factory Interview Questions And Answers for Beginners

1. Define Azure Data Factory.

Answer: Azure Data Factory (ADF) is a cloud-based data integration service that allows you to create, schedule, and manage data pipelines. It enables data movement and transformation from various sources to destinations.

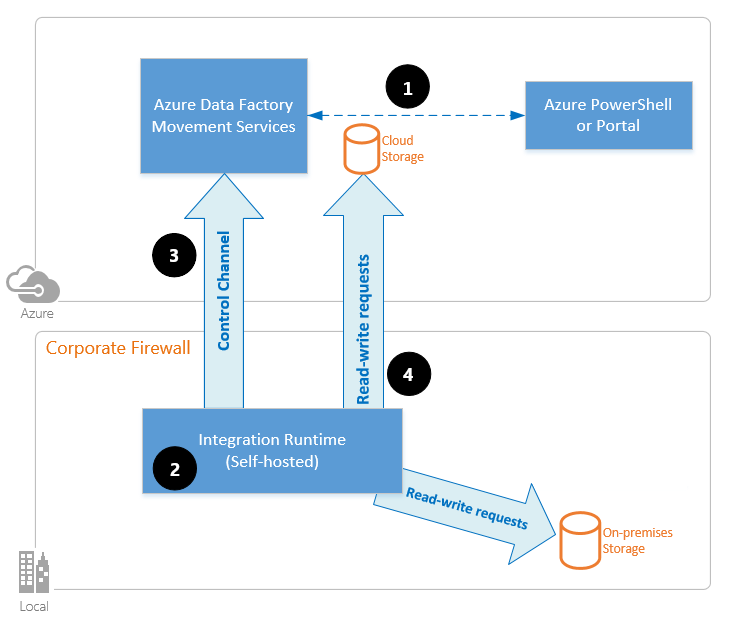

2. Explain what Integration Runtime is in Azure Data Factory

Answer: Integration Runtime (IR) is a compute infrastructure used by Azure Data Factory to provide data integration capabilities across different network environments. It facilitates data movement between on-premises and cloud data stores.

3. Define what Self-Hosted Integration and Azure SSIS Integration Runtimes are.

Self-Hosted Integration Runtime: It allows data movement between on-premises data stores and Azure services. You install and manage it on your own infrastructure.

Azure SSIS Integration Runtime: It’s a managed service for running SQL Server Integration Services (SSIS) packages in Azure. It provides scalability and flexibility for ETL processes.

4. Differentiate Azure Data Lake from Azure Data Warehouse

Answer:

- Azure Data Lake: It’s a large-scale data lake storage service for big data analytics. It stores unstructured and semi-structured data.

- Azure Data Warehouse (Synapse Analytics): It’s a fully managed data warehouse service for structured data. It’s optimized for analytical workloads.

5. Walk us through how you normally create an ETL process in Azure Data Factory.

Answer: An ETL (Extract, Transform, Load) process in ADF involves:

- Extract: Retrieve data from various sources (files, databases, APIs).

- Transform: Apply data transformations (filtering, aggregations, joins).

- Load: Load the transformed data into target destinations (Azure SQL Database, Data Lake, etc.).
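The three steps above can be sketched in plain Python. This is only an illustration of the extract, transform, load pattern, not ADF code: the sample CSV, the function names, and the dict that stands in for a target table are all assumptions made for the example.

```python
import csv
import io

# Hypothetical source data standing in for a file, database, or API.
RAW_CSV = """order_id,region,amount
1,east,100.0
2,west,50.0
3,east,25.5
4,west,-10.0
"""

def extract(text):
    """Extract: read rows from the source."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transform: drop non-positive amounts, then total the amount per region."""
    totals = {}
    for row in rows:
        amount = float(row["amount"])
        if amount <= 0:  # filtering step
            continue
        totals[row["region"]] = totals.get(row["region"], 0.0) + amount  # aggregation step
    return totals

def load(totals, target):
    """Load: write the result into the target (a dict standing in for a table)."""
    target.update(totals)
    return target

warehouse = load(transform(extract(RAW_CSV)), {})
print(warehouse)
```

In ADF itself, the extract and load steps would typically map to a Copy activity and the transform step to a data flow or an external compute activity.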

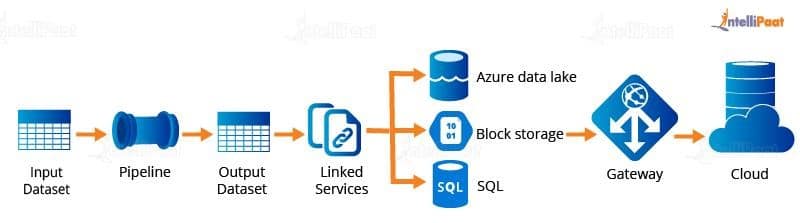

6. What are the top-level concepts of Azure Data Factory?

Answer: Key concepts include linked services, datasets, pipelines, activities, triggers, and integration runtimes.

7. What are the security levels in ADLS Gen2?

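Answer: ADLS Gen2 (Azure Data Lake Storage Gen2) secures data at several levels. Azure role-based access control (RBAC) grants coarse-grained access at the storage account and container level, while POSIX-style access control lists (ACLs) grant fine-grained access at the directory and file level. Shared Key and shared access signature (SAS) authorization are also supported.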

Top Azure Data Factory Interview Questions 2024: Scenario-Based

8. You need to copy data from an on-premises SQL Server database to Azure SQL Database. How would you design this data pipeline in ADF?

Answer: Use a pipeline with a Copy activity, a source dataset pointing to the on-premises SQL Server, and a sink dataset pointing to the Azure SQL Database. Because the source is on-premises, configure a self-hosted integration runtime for the SQL Server linked service.

9. How would you handle incremental data loads in ADF?

Answer: Use watermark-based logic or change tracking to identify new or modified records since the last load. Implement this logic in your data flow or pipeline.
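A minimal sketch of the watermark idea in Python, assuming each source row carries a hypothetical `modified_at` timestamp. The function and field names are made up for illustration; in ADF the same logic is usually built from a Lookup activity that reads the stored watermark and a Copy activity whose source query filters on it.

```python
from datetime import datetime

def incremental_load(source_rows, last_watermark):
    """Return rows modified after last_watermark, plus the new watermark."""
    new_rows = [r for r in source_rows if r["modified_at"] > last_watermark]
    # If nothing changed, keep the old watermark so the next run starts from the same point.
    new_watermark = max((r["modified_at"] for r in new_rows), default=last_watermark)
    return new_rows, new_watermark

# Hypothetical source table with a last-modified column.
source = [
    {"id": 1, "modified_at": datetime(2024, 1, 1)},
    {"id": 2, "modified_at": datetime(2024, 1, 5)},
    {"id": 3, "modified_at": datetime(2024, 1, 9)},
]

rows, watermark = incremental_load(source, datetime(2024, 1, 3))
print([r["id"] for r in rows], watermark)
```

Storing the returned watermark after each successful run is what makes the next run pick up only newer records.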

10. Differentiate between a Dataset and a Linked Service in Azure Data Factory.

Answer:

- Dataset: Represents the data structure within a data store (e.g., a table in a database or a file in a storage account).

- Linked Service: Defines the connection information to external data stores (e.g., Azure SQL Database, Azure Blob Storage).
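The relationship can be illustrated with two Python dictionaries that mirror the general shape of ADF's JSON definitions. The names `BlobStorageLS` and `SalesCsv`, the container, and the file name are hypothetical, and the property names should be checked against the official reference before use.

```python
# Hypothetical linked service: holds only the connection information.
linked_service = {
    "name": "BlobStorageLS",
    "properties": {
        "type": "AzureBlobStorage",
        "typeProperties": {"connectionString": "<connection-string>"},
    },
}

# Hypothetical dataset: describes the data and refers to the linked service by name.
dataset = {
    "name": "SalesCsv",
    "properties": {
        "type": "DelimitedText",
        "linkedServiceName": {
            "referenceName": "BlobStorageLS",
            "type": "LinkedServiceReference",
        },
        "typeProperties": {
            "location": {
                "type": "AzureBlobStorageLocation",
                "container": "sales",
                "fileName": "2024.csv",
            },
            "columnDelimiter": ",",
            "firstRowAsHeader": True,
        },
    },
}

def resolve_linked_service(ds, services):
    """Find the linked service a dataset points at (illustrative helper)."""
    wanted = ds["properties"]["linkedServiceName"]["referenceName"]
    return next(s for s in services if s["name"] == wanted)

print(resolve_linked_service(dataset, [linked_service])["name"])
```

Several datasets can reference the same linked service, which is why connection details live in one place and data shapes in another.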

12. Explain the concept of partitioning in Azure Data Factory.

Answer:

Partitioning involves dividing large datasets into smaller chunks (partitions) for parallel processing. ADF can partition data during data movement or transformation activities to improve performance.
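As a rough illustration of the idea, the sketch below splits a list of rows into partitions and processes them in parallel with Python's standard thread pool. The round-robin split and the `process` function are assumptions chosen for the example; ADF applies its own partitioning options inside copy and data flow activities.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(rows, num_partitions):
    """Split rows into num_partitions chunks using a round-robin assignment."""
    return [rows[i::num_partitions] for i in range(num_partitions)]

def process(chunk):
    """Stand-in for per-partition work, here a simple sum."""
    return sum(chunk)

rows = list(range(1, 101))
parts = partition(rows, 4)

# Each partition is handled by its own worker, then the partial results are combined.
with ThreadPoolExecutor(max_workers=4) as pool:
    partial_sums = list(pool.map(process, parts))

total = sum(partial_sums)
print(total)
```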

13. What are the benefits of using Azure Data Factory over traditional ETL tools?

Answer:

- Scalability: ADF scales automatically based on workload demands.

- Serverless: No need to manage infrastructure.

- Integration with Azure Services: Seamlessly integrates with other Azure services.

- Cost-Effective: Pay only for what you use.

14. How would you handle schema changes in source data during ETL processes?

Answer:

Use dynamic mapping or schema drift handling in ADF. Dynamic mapping lets a Copy activity adapt its column mapping at run time, while schema drift handling in mapping data flows lets transformations cope with columns that are added, removed, or changed in the source.

15. How can we use Data Factory to deliver code to higher environments?

At a high level, we can do this with the following steps:

- Create a feature branch to hold our code changes.

- Once the code is verified, create a pull request to merge it into the Dev branch.

- Publish the code from the Dev branch to generate ARM templates.

- The publish step can trigger an automated CI/CD DevOps pipeline that promotes the code to higher environments, such as staging or production.

16. Which three types of activities can you run in Microsoft Azure Data Factory?

Azure Data Factory supports three types of activities: data movement, data transformation, and control activities.

- Data movement activities: As the name implies, these move data from one location to another. For example, the Copy activity in Data Factory copies data from a source to a sink data store.

- Data transformation activities: These transform the data while it is loaded into the target or destination. Examples include the U-SQL, Azure Function, and Stored Procedure activities.

- Control flow activities: These control the flow of the other activities in a pipeline. For example, the Wait activity pauses the pipeline for a specified amount of time.

17. Which two types of compute environments does Data Factory support for running transformation activities?

Data Factory supports the following compute environments for running transformation activities:

i) On-demand compute environment: This is a fully managed environment offered by ADF. The service creates a cluster to run the transformation activity and removes it automatically once the activity completes.

ii) Bring your own environment: If you already have compute infrastructure in place, you can register it with ADF as a linked service. You manage the environment yourself, and ADF uses it to run the activities.

18. Describe the steps that make up an ETL process.

There are four primary phases in an ETL process built with Azure Data Factory:

i) Connect and collect: Connect to the data source or sources and move the data to a centralized data store in the cloud.

ii) Transform: Transform the data using compute services such as HDInsight, Hadoop, and Spark.

iii) Publish: Load the data into Azure Data Lake, Azure SQL Data Warehouse, Azure SQL Database, Azure Cosmos DB, and so on.

iv) Monitor: Azure Data Factory has built-in support for pipeline monitoring through Azure Monitor, the API, PowerShell, and Azure Monitor logs.

19. Have you used the Notebook activity in Data Factory? How do you pass parameters to a notebook activity?

We can use the Notebook activity to run code on our Databricks cluster. Parameters are passed to the notebook through the activity's baseParameters property. If a parameter is not defined or specified in the activity, the default value from the notebook is used.
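The fallback behaviour described above can be sketched as follows. The activity is shown as a Python dictionary that mirrors the general shape of a Databricks Notebook activity definition; the activity name, notebook path, linked service name, and the `NOTEBOOK_DEFAULTS` dictionary are hypothetical, and `effective_parameters` is an illustrative helper rather than part of any SDK.

```python
# Hypothetical activity definition, mirroring the JSON shape as a Python dict.
notebook_activity = {
    "name": "RunDailyNotebook",
    "type": "DatabricksNotebook",
    "linkedServiceName": {
        "referenceName": "DatabricksLS",
        "type": "LinkedServiceReference",
    },
    "typeProperties": {
        "notebookPath": "/Shared/daily_load",
        "baseParameters": {"run_date": "2024-01-31"},
    },
}

# Assumed defaults declared inside the notebook itself (for example, via widgets).
NOTEBOOK_DEFAULTS = {"run_date": "1970-01-01", "region": "all"}

def effective_parameters(activity, defaults):
    """Values passed in baseParameters win; anything missing falls back to the default."""
    passed = activity["typeProperties"].get("baseParameters", {})
    return {**defaults, **passed}

print(effective_parameters(notebook_activity, NOTEBOOK_DEFAULTS))
```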

20. What are some of the Data Factory’s helpful constructs?

- parameter: Every activity in a pipeline can consume a parameter value passed to the pipeline, referenced in expressions as @pipeline().parameters.<name>.

- coalesce: The @coalesce construct can be used in expressions to handle null values gracefully.

- activity: The @activity construct lets a later activity consume the output of an earlier one, for example @activity('<name>').output.
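To make the three constructs concrete, here is a small Python imitation of how they combine. The `coalesce` function, the `targetFolder` parameter, and the `LookupConfig` activity are all hypothetical stand-ins; the real evaluation happens inside ADF's expression engine.

```python
def coalesce(*values):
    """Return the first value that is not None, similar in spirit to ADF's coalesce."""
    for value in values:
        if value is not None:
            return value
    return None

# Hypothetical run state: one pipeline parameter left null, one earlier activity output.
pipeline_parameters = {"targetFolder": None}
activity_outputs = {"LookupConfig": {"firstRow": {"folder": "landing"}}}

# Rough Python equivalent of the expression:
# @coalesce(pipeline().parameters.targetFolder, activity('LookupConfig').output.firstRow.folder)
folder = coalesce(
    pipeline_parameters["targetFolder"],
    activity_outputs["LookupConfig"]["firstRow"]["folder"],
)
print(folder)
```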

21. Can ADF be used for code push and continuous integration and delivery (CI/CD)?

Data Factory fully supports CI/CD of your data pipelines using Azure DevOps and GitHub. This lets you develop and deliver your ETL processes incrementally before publishing the finished product. Once the raw data has been refined into a business-ready, consumable form, load it into Azure Data Warehouse, Azure SQL Database, Azure Data Lake, Azure Cosmos DB, or whichever analytics engine your business users can reach from their business intelligence tools.

22. What are variables in Azure Data Factory?

Variables in an Azure Data Factory pipeline provide a way to store values. They are available inside the pipeline and serve the same purpose as variables in any programming language.

Two activities set or modify the values of variables: Set Variable and Append Variable. A data factory has two kinds of variables:

i) System variables: These are fixed variables provided by the Azure pipeline, such as the pipeline name, the pipeline ID, and the trigger name. You use them to obtain the system information your use case needs.

ii) User variables: A user variable is declared explicitly by you, based on your pipeline logic.
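The difference between the two kinds of variables, and between setting and appending, can be modelled with a toy class. `PipelineRun` is not an ADF SDK type; it is a hypothetical model written for this example, and the variable names are invented.

```python
class PipelineRun:
    """Toy model of one pipeline run (not part of any ADF SDK)."""

    def __init__(self, pipeline_name, run_id, trigger_name):
        # System variables: supplied by the service, not modified by pipeline logic.
        self.system = {
            "Pipeline": pipeline_name,
            "RunId": run_id,
            "TriggerName": trigger_name,
        }
        # User variables: declared by the pipeline author with default values.
        self.variables = {"status": "pending", "processed_files": []}

    def set_variable(self, name, value):
        """Mimics the Set Variable activity: overwrite the current value."""
        if name not in self.variables:
            raise KeyError(f"variable {name!r} is not declared")
        self.variables[name] = value

    def append_variable(self, name, value):
        """Mimics the Append Variable activity: add an item to an array variable."""
        if not isinstance(self.variables.get(name), list):
            raise TypeError(f"variable {name!r} is not an array")
        self.variables[name].append(value)

run = PipelineRun("DailyLoad", "run-001", "NightlyTrigger")
run.append_variable("processed_files", "a.csv")
run.append_variable("processed_files", "b.csv")
run.set_variable("status", "done")
print(run.system["Pipeline"], run.variables)
```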

23. What are mapping data flows?

In Azure Data Factory, mapping data flows are visually designed data transformations. They let data engineers build graphical data transformation logic without writing code. The resulting data flows are executed as activities within Azure Data Factory pipelines on scaled-out Apache Spark clusters. Data flow activities can be operationalized with the scheduling, control flow, and monitoring features of Azure Data Factory.

Mapping data flows offer a fully visual experience with no coding required. The data flows run on ADF-managed execution clusters for scaled-out data processing. Azure Data Factory handles all the code translation, path optimization, and execution of the data flow jobs.

24. What is the Copy activity in Azure Data Factory?

The Copy activity is one of the most popular and widely used activities in Azure Data Factory. It is used for ETL or lift-and-shift scenarios, where data is moved from one data source to another. You can also apply transformations while copying the data. For instance, suppose you read data from a TXT/CSV file with 12 columns but want to keep only seven of them when writing to the destination. You can map the columns so that only the required ones reach the destination data source.

25. Could you provide further details about the Copy activity?

At a high level, the Copy activity performs the following steps:

i) Read data from the source data store.

ii) Perform the following operations on the data:

- Serialization and deserialization

- Compression and decompression

- Column mapping

iii) Write data to the destination data store, or sink. For example, Azure Data Lake.
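These steps can be imitated in a few lines of Python: read CSV text, deserialize it into rows, keep and rename only the mapped columns, and serialize the result as JSON lines for the sink. The sample data, the `COLUMN_MAPPING` dictionary, and the `copy_rows` function are assumptions for the sketch and do not reflect how the Copy activity is implemented.

```python
import csv
import io
import json

# Hypothetical source file with four columns.
SOURCE = (
    "id,name,email,notes\n"
    "1,Ada,ada@example.com,x\n"
    "2,Lin,lin@example.com,y\n"
)

# Source column -> sink column; unmapped columns are dropped.
COLUMN_MAPPING = {"id": "customer_id", "email": "contact_email"}

def copy_rows(source_text, mapping):
    """Read and deserialize the source, map columns, and serialize for the sink."""
    rows = csv.DictReader(io.StringIO(source_text))  # read + deserialize
    mapped = [{dst: row[src] for src, dst in mapping.items()} for row in rows]  # column mapping
    return "\n".join(json.dumps(r) for r in mapped)  # serialize as JSON lines

sink_text = copy_rows(SOURCE, COLUMN_MAPPING)
print(sink_text)
```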

26. Is it possible to calculate the value of a new column from an existing column in an ADF mapping?

Yes. Using the derived column transformation in a mapping data flow, we can generate a new column with whatever logic we want. When building a derived column, we can either create a new column or update an existing one. In the Column textbox, type the name of the column you're creating.

Use the column dropdown to override a column that already exists in your schema. To start building the derived column's expression, click the Enter expression textbox. You can either type the expression directly or use the expression builder to construct your logic.

27. How is the Lookup activity useful in Azure Data Factory?

In an ADF pipeline, the Lookup activity is commonly used to look up configuration values from a source dataset. It retrieves the data from the source dataset and returns it as the activity output. Typically, the output of the Lookup activity is used further down the pipeline to make decisions or to supply configuration to later activities.

In short, the Lookup activity is used for fetching data in an ADF pipeline. How you use it depends entirely on your pipeline logic. Depending on your dataset or query, you can retrieve either only the first row or the complete set of rows.
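The two retrieval modes can be sketched in Python. The output keys `firstRow`, `count`, and `value` follow the shape the Lookup activity returns, but the `lookup` function, the sample rows, and the assumption that at least one row exists are all illustrative.

```python
def lookup(rows, first_row_only=True):
    """Imitate the Lookup output shape; assumes rows contains at least one row."""
    if first_row_only:
        return {"firstRow": rows[0]}
    return {"count": len(rows), "value": rows}

# Hypothetical configuration table read by the lookup.
config_rows = [
    {"table": "orders", "enabled": True},
    {"table": "customers", "enabled": False},
]

single = lookup(config_rows)
everything = lookup(config_rows, first_row_only=False)

# A later step can branch on the result, much like an If Condition or ForEach activity would.
tables_to_load = [r["table"] for r in everything["value"] if r["enabled"]]
print(single["firstRow"]["table"], tables_to_load)
```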

28. Provide more details about Azure Data Factory’s Get Metadata activity.

The Get Metadata activity retrieves the metadata of any data in an Azure Data Factory or Synapse pipeline. The metadata it returns can be validated with conditional expressions or consumed by later activities.

It takes a dataset as input and returns metadata information as output. Only certain connectors and metadata fields are currently supported, and the returned metadata has a maximum size of 4 MB.

For the metadata that the Get Metadata activity can retrieve, please refer to the snapshot below.

29. How can an ADF pipeline be debugged?

Debugging is one of the most important parts of any coding activity, because it is how you test the code for errors. ADF also offers a Debug option for pipelines.

30. What is a breakpoint in an ADF pipeline?

To understand it better, suppose you have a pipeline with three activities and you want to debug only up to the second one. You can do this by setting a breakpoint on the second activity. To add a breakpoint, click the circle that appears at the top of the activity.

31. What is the ADF service used for?

ADF is mainly used to orchestrate data copying between relational and non-relational data sources hosted on-premises, in data centers, or in the cloud. You can also use the ADF service to transform the ingested data to meet business needs. In most Big Data solutions, the ADF service is used as an ETL or ELT tool for data ingestion.

32. Describe the data source in Azure Data Factory.

The data source is the source or destination system that holds the data to be used or processed. The data can be binary, text, CSV, or JSON files, image, audio, or video files, or a proper database.

Examples of data sources include Azure Blob Storage, Azure Data Lake, and databases such as Postgres, Azure SQL, and MySQL.

Conclusion:

Hire Azure Data Factory developers who have a deep understanding of the topics behind these interview questions. With solid foundations in the subject, they will be better prepared to answer any question that comes their way.