In today's data landscape, the Extract, Transform, Load (ETL) process is the foundation of virtually every data integration and analytics workflow. With data volumes growing and business requirements becoming more complex, the need for scalable ETL solutions has never been greater. Azure Data Factory, Microsoft Azure's cloud data integration service, is one of the most effective tools for designing, scheduling, and orchestrating ETL processes. This article is a guide to building scalable ETL processes with Azure Data Factory, from basic concepts to advanced best practices.

Understanding Scalability in ETL

Scalability is at the core of a good ETL process. A scalable process lets an organization keep up with growing data volumes, absorb spikes in workload, and adapt to new and changing business needs without sacrificing performance or reliability.

In an ETL context, scalability is the ability of a data pipeline to expand or contract dynamically as data volume, processing requirements, or user demand change.

A scalable ETL solution can scale vertically, by adding more resources (such as CPU or memory) to individual components, and horizontally, by distributing the workload across multiple instances or nodes.

Azure Data Factory Overview

Azure Data Factory (ADF) is a cloud-based data integration service for ETL, ELT (Extract, Load, Transform), and data warehousing scenarios. It lets data engineers create, schedule, and orchestrate data pipelines.

ADF provides a flexible, scalable platform for building end-to-end data integration solutions using a rich visual interface with an extensive library of data connectors and tight integration with other Azure services.

Whether you are migrating data to the cloud, transforming raw data into useful insights, or orchestrating a complex workflow, Azure Data Factory is well suited to the task.

Designing Scalable ETL Processes in Azure Data Factory

When designing scalable ETL processes in Azure Data Factory, it’s essential to consider several key factors:

1. Data Movement Considerations: ADF moves data between sources and destinations mainly through the Copy activity (Copy Data in the authoring interface), while mapping data flows can also read from and write to stores such as Azure Data Lake Storage.

The Copy activity supports parallel copies and source partitioning, which users can tune to get optimal data movement performance and scalability.
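To make those tuning knobs concrete, the sketch below builds a Copy activity definition as a Python dict shaped like ADF pipeline JSON. It assumes an Azure SQL source and a Parquet sink; the activity name and the values chosen are hypothetical, and a real definition also needs `inputs`/`outputs` dataset references, which are omitted here.

```python
import json

def copy_activity(name: str, parallel_copies: int, diu: int) -> dict:
    """Build a Copy activity definition shaped like ADF pipeline JSON (sketch only)."""
    return {
        "name": name,
        "type": "Copy",
        "typeProperties": {
            "source": {
                "type": "AzureSqlSource",
                # Read the source table's physical partitions in parallel.
                "partitionOption": "PhysicalPartitionsOfTable",
            },
            "sink": {"type": "ParquetSink"},
            # Upper bound on parallel threads reading from source / writing to sink.
            "parallelCopies": parallel_copies,
            # Data Integration Units: compute allotted to the copy on an Azure IR.
            "dataIntegrationUnits": diu,
        },
    }

# Hypothetical activity name and sizing, for illustration only.
activity = copy_activity("CopySalesToLake", parallel_copies=8, diu=16)
print(json.dumps(activity, indent=2))
```

Generating definitions from code like this makes it easy to keep parallelism settings consistent across many similar pipelines.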

2. Data Transformation Strategies: ADF supports several forms of data transformation: mapping data flows, custom code running in Azure Functions or on Azure HDInsight clusters, and integration with Azure Databricks to prepare big data workloads for advanced analytics and machine learning.

Transformations scale because the work is distributed across multiple nodes. Mapping data flows, for example, run on Spark clusters that ADF provisions and manages on demand, so users get distributed processing without managing servers themselves.
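The idea behind distributed transformation is a split / transform / merge pattern. The sketch below mimics that shape on a single machine with a thread pool; it is not how Spark schedules work across nodes, and the `(region, amount)` row format is a hypothetical example.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

def transform_partition(rows: list[tuple[str, int]]) -> Counter:
    # "Map" step: each worker cleans and aggregates only its own partition.
    totals: Counter = Counter()
    for region, amount in rows:
        totals[region.strip().upper()] += amount
    return totals

def distributed_transform(rows: list[tuple[str, int]], num_partitions: int = 4) -> dict:
    # Split the input round-robin into roughly equal partitions.
    partitions = [rows[i::num_partitions] for i in range(num_partitions)]
    with ThreadPoolExecutor(max_workers=num_partitions) as pool:
        partials = list(pool.map(transform_partition, partitions))
    # "Reduce" step: merge the partial aggregates into the final result.
    result: Counter = Counter()
    for partial in partials:
        result.update(partial)  # Counter.update adds counts
    return dict(result)

rows = [(" east", 10), ("West", 5), ("EAST", 7), ("west ", 3), ("North", 1)]
print(distributed_transform(rows))
```

Because each partition is processed independently, adding workers (or nodes, in a real cluster) increases throughput without changing the transformation logic.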

3. Workflow Orchestration Techniques: ADF ties activities together in pipelines, allowing users to orchestrate complex data workflows.

Schedule-based and event-driven triggers automate the execution of ETL processes, so pipelines run when work is due or data arrives, and resources are consumed in step with workload and demand.
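As an example of a schedule-based trigger, the sketch below builds a daily `ScheduleTrigger` definition as a Python dict shaped like ADF JSON. The trigger name, pipeline name, and start time are hypothetical placeholders.

```python
import json

def schedule_trigger(name: str, pipeline_name: str, frequency: str,
                     interval: int, start_time: str) -> dict:
    """Build a ScheduleTrigger definition shaped like ADF JSON (sketch only)."""
    return {
        "name": name,
        "properties": {
            "type": "ScheduleTrigger",
            "typeProperties": {
                "recurrence": {
                    "frequency": frequency,  # e.g. "Minute", "Hour", "Day", "Week", "Month"
                    "interval": interval,    # run every `interval` units of `frequency`
                    "startTime": start_time,
                    "timeZone": "UTC",
                }
            },
            # A trigger can start one or more pipelines; here it starts one.
            "pipelines": [
                {"pipelineReference": {"referenceName": pipeline_name,
                                       "type": "PipelineReference"}}
            ],
        },
    }

trigger = schedule_trigger("NightlyTrigger", "NightlyWarehouseLoad",
                           "Day", 1, "2024-01-01T02:00:00Z")
print(json.dumps(trigger, indent=2))
```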

4. Monitoring and Management Best Practices: ADF's built-in monitoring and management capabilities include integration with Azure Monitor, diagnostic logs, and dashboards of performance and health metrics, along with cost tracking for data pipelines.

Set up proactive monitoring and alerting so that scaling issues are discovered and fixed before they affect the business.
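A simple alerting rule flags failed runs and runs that are much slower than usual. The sketch below applies that rule to a list of run records; the field names (`runId`, `status`, `durationInMs`) are modeled on ADF pipeline run data but are an assumption here, as are the sample records and the "2x the median" threshold.

```python
from statistics import median

def find_alerts(runs: list[dict], slow_factor: float = 2.0) -> list[tuple[str, str]]:
    """Flag failed runs and runs slower than slow_factor x the median successful run."""
    durations = [r["durationInMs"] for r in runs if r["status"] == "Succeeded"]
    baseline = median(durations) if durations else None
    alerts = []
    for run in runs:
        if run["status"] == "Failed":
            alerts.append((run["runId"], "failed"))
        elif baseline is not None and run["durationInMs"] > slow_factor * baseline:
            alerts.append((run["runId"], "slow"))
    return alerts

# Hypothetical run records for illustration.
runs = [
    {"runId": "r1", "status": "Succeeded", "durationInMs": 60_000},
    {"runId": "r2", "status": "Failed", "durationInMs": 5_000},
    {"runId": "r3", "status": "Succeeded", "durationInMs": 300_000},
    {"runId": "r4", "status": "Succeeded", "durationInMs": 70_000},
]
print(find_alerts(runs))
```

Comparing against a median baseline rather than a fixed limit lets the rule adapt as normal run times grow with data volume.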

Architectural Patterns for Scalable ETL in Azure Data Factory

Azure Data Factory supports several architectural patterns that organizations can use to build scalable ETL solutions:

1. Batch Processing Architecture: This pattern suits scenarios where data is processed in bulk at scheduled times, such as nightly data warehouse loads or monthly financial reporting.

ADF can process large data volumes in parallel within each batch, which helps deliver timely and accurate insights.

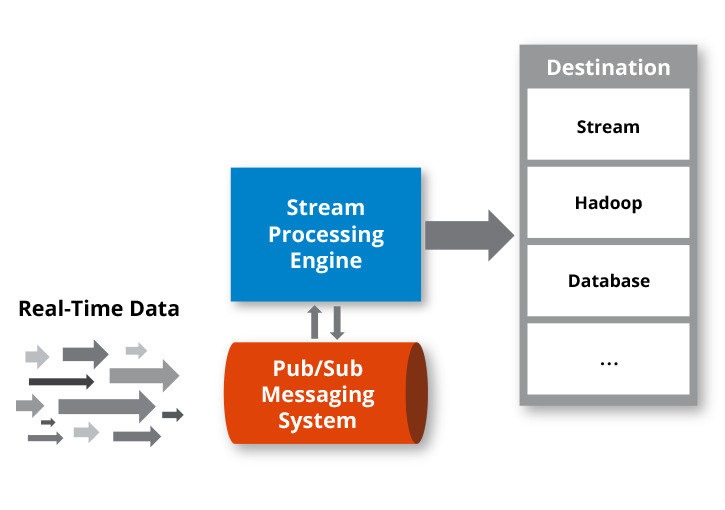
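A common way to parallelize a batch load in ADF is a ForEach activity that fans out over a list of tables. The sketch below builds such a pipeline as a Python dict shaped like ADF JSON; the pipeline name, the `tableList` parameter, and the assumption that each list item has a `name` field are all hypothetical.

```python
import json

def nightly_load_pipeline(batch_count: int = 20) -> dict:
    """Build a fan-out batch pipeline shaped like ADF JSON (sketch only)."""
    if not 1 <= batch_count <= 50:
        raise ValueError("ForEach batchCount must be between 1 and 50")
    copy_one_table = {
        "name": "CopyOneTable",
        "type": "Copy",
        "typeProperties": {
            "source": {
                "type": "AzureSqlSource",
                # Dynamic content: build the query from the current ForEach item.
                "sqlReaderQuery": {"value": "@concat('SELECT * FROM ', item().name)",
                                   "type": "Expression"},
            },
            "sink": {"type": "ParquetSink"},
        },
    }
    return {
        "name": "NightlyWarehouseLoad",
        "properties": {
            "parameters": {"tableList": {"type": "array"}},
            "activities": [{
                "name": "ForEachTable",
                "type": "ForEach",
                "typeProperties": {
                    "isSequential": False,      # run iterations in parallel
                    "batchCount": batch_count,  # max iterations running at once
                    "items": {"value": "@pipeline().parameters.tableList",
                              "type": "Expression"},
                    "activities": [copy_one_table],
                },
            }],
        },
    }

print(json.dumps(nightly_load_pipeline(batch_count=10), indent=2))
```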

2. Real-time Processing Architecture: When an organization needs data processed as soon as it arrives, for example for real-time analytics, event-driven processing, or data ingestion from IoT (Internet of Things) devices, a real-time architecture is the better choice.

ADF has built-in support for event-based triggers, streaming data sources, and data movement activities that consume data as it arrives. Users can therefore analyze and act on data in near real time, which speeds up organizational decision-making and response times.
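For event-driven ingestion, a storage event trigger can start a pipeline whenever a new blob lands. The sketch below builds a `BlobEventsTrigger` definition as a Python dict shaped like ADF JSON; the container, folder, pipeline name, and the placeholder storage account resource ID are hypothetical.

```python
import json

def blob_created_trigger(name: str, pipeline_name: str, storage_account_id: str,
                         container: str, folder: str) -> dict:
    """Build a BlobEventsTrigger definition shaped like ADF JSON (sketch only)."""
    return {
        "name": name,
        "properties": {
            "type": "BlobEventsTrigger",
            "typeProperties": {
                # Path filter format: /<container>/blobs/<folder>/
                "blobPathBeginsWith": f"/{container}/blobs/{folder}/",
                "ignoreEmptyBlobs": True,
                "scope": storage_account_id,  # resource ID of the storage account
                "events": ["Microsoft.Storage.BlobCreated"],
            },
            "pipelines": [
                {"pipelineReference": {"referenceName": pipeline_name,
                                       "type": "PipelineReference"}}
            ],
        },
    }

account_id = ("/subscriptions/<subscription-id>/resourceGroups/<resource-group>"
              "/providers/Microsoft.Storage/storageAccounts/<account>")
trigger = blob_created_trigger("OnIotFileArrived", "IngestIotData",
                               account_id, "landing", "iot")
print(json.dumps(trigger, indent=2))
```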

3. Hybrid Processing Architecture: When an organization's data processing needs are mixed, a hybrid processing architecture is recommended. Batch and real-time processing complement each other here, serving a wide variety of data sources with different processing frequencies and workload patterns.

Organizations can tailor hybrid designs to their own requirements, gaining efficiency and optimized performance along with the scalability and flexibility that ADF offers.

Implementing Scalable ETL Processes in Azure Data Factory

Implementing scalable ETL processes in Azure Data Factory involves several key steps:

1. Setting up Azure Data Factory: Start by creating a data factory instance in the Azure portal, then set up linked services that connect it to your data sources and destinations.

This mainly involves defining connection strings, authentication methods, and access controls so that data flows reliably and securely.
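A good practice for linked services is to keep secrets out of the connection string and reference them from Azure Key Vault instead. The sketch below builds an Azure SQL Database linked service this way as a Python dict shaped like ADF JSON; the server, database, user, Key Vault linked service name, and secret name are hypothetical.

```python
import json

def sql_linked_service(name: str, server: str, database: str, user: str,
                       key_vault_ls: str, secret_name: str) -> dict:
    """Build an Azure SQL linked service with a Key Vault password (sketch only)."""
    return {
        "name": name,
        "properties": {
            "type": "AzureSqlDatabase",
            "typeProperties": {
                # No password here: it is resolved from Key Vault at runtime.
                "connectionString": (f"Data Source=tcp:{server},1433;"
                                     f"Initial Catalog={database};User ID={user};"
                                     "Encrypt=True;Connection Timeout=30"),
                "password": {
                    "type": "AzureKeyVaultSecret",
                    "store": {"referenceName": key_vault_ls,
                              "type": "LinkedServiceReference"},
                    "secretName": secret_name,
                },
            },
        },
    }

ls = sql_linked_service("SalesDb", "myserver.database.windows.net", "sales",
                        "etl_user", "CompanyKeyVault", "sales-db-password")
print(json.dumps(ls, indent=2))
```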

2. Designing Data Pipelines Visually: Using ADF's visual interface, design a pipeline that moves data from the source to the destination, defining the activities, dependencies, and triggers required to execute the ETL process automatically.
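Dependencies between activities determine which steps can run in parallel. The sketch below takes activity definitions with `dependsOn` entries shaped like ADF JSON and groups them into stages with Python's `graphlib`; activities in the same stage have no dependency on each other. The activity names are hypothetical, and the sketch treats every dependency as a plain "must finish first" edge, ignoring ADF's other dependency conditions (Failed, Skipped, Completed).

```python
from graphlib import TopologicalSorter

def after(*names: str) -> list[dict]:
    # Helper producing dependsOn entries that require success of each named activity.
    return [{"activity": n, "dependencyConditions": ["Succeeded"]} for n in names]

activities = [
    {"name": "CopyToStaging", "dependsOn": []},
    {"name": "TransformSales", "dependsOn": after("CopyToStaging")},
    {"name": "TransformCustomers", "dependsOn": after("CopyToStaging")},
    {"name": "LoadWarehouse", "dependsOn": after("TransformSales", "TransformCustomers")},
]

def execution_stages(activities: list[dict]) -> list[list[str]]:
    """Group activities into stages; each stage can run in parallel."""
    graph = {a["name"]: {d["activity"] for d in a.get("dependsOn", [])}
             for a in activities}
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError on circular dependencies
    stages = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        stages.append(ready)
        sorter.done(*ready)
    return stages

print(execution_stages(activities))
```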


3. Configuring Data Movement and Transformation: Within the pipeline, configure the data movement and transformation activities that perform the required manipulation, enrichment, or cleansing of the data.

This can include defining mappings, transformations, and data validation rules that preserve data quality and integrity.
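Validation rules are easiest to maintain as a list of named checks applied to every row, with failing rows routed aside rather than silently dropped. The Python sketch below illustrates that idea; the field names (`order_id`, `amount`, `currency`) and the rules themselves are hypothetical, and in ADF itself this logic would typically live in a mapping data flow (for example, a conditional split).

```python
from typing import Callable

Rule = tuple[str, Callable[[dict], bool]]

# Hypothetical rules for an orders feed.
RULES: list[Rule] = [
    ("order_id is present", lambda r: bool(r.get("order_id"))),
    ("amount is non-negative",
     lambda r: isinstance(r.get("amount"), (int, float)) and r["amount"] >= 0),
    ("currency is a 3-letter code",
     lambda r: isinstance(r.get("currency"), str)
     and len(r["currency"]) == 3 and r["currency"].isalpha()),
]

def validate(rows: list[dict], rules: list[Rule] = RULES) -> tuple[list[dict], list[dict]]:
    """Split rows into valid rows and rejected rows annotated with failed rules."""
    valid, rejected = [], []
    for row in rows:
        failures = [name for name, check in rules if not check(row)]
        if failures:
            rejected.append({"row": row, "errors": failures})
        else:
            valid.append(row)
    return valid, rejected

rows = [
    {"order_id": "A1", "amount": 19.99, "currency": "USD"},
    {"order_id": "", "amount": 5, "currency": "EUR"},
    {"order_id": "A3", "amount": -1, "currency": "US"},
]
valid, rejected = validate(rows)
print(len(valid), [r["errors"] for r in rejected])
```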

4. Scaling Resources to Optimize Performance: Size resources such as Azure Integration Runtimes, compute instances, and storage accounts appropriately to achieve optimal performance and scalability across all ETL processes. This may involve tuning resource settings, provisioning more capacity, or using serverless compute options to handle variable workloads.
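One concrete scaling lever is the compute size of the Azure Integration Runtime used by mapping data flows. The sketch below builds a managed integration runtime definition as a Python dict shaped like ADF JSON; the runtime name and sizing values are hypothetical, and the set of supported core counts and compute types should be checked against current Azure documentation.

```python
import json

def managed_integration_runtime(name: str, core_count: int, ttl_minutes: int,
                                compute_type: str = "General") -> dict:
    """Build an Azure IR definition with data flow compute settings (sketch only)."""
    if core_count <= 0 or ttl_minutes < 0:
        raise ValueError("core_count must be positive and ttl_minutes non-negative")
    return {
        "name": name,
        "properties": {
            "type": "Managed",
            "typeProperties": {
                "computeProperties": {
                    "location": "AutoResolve",
                    "dataFlowProperties": {
                        "computeType": compute_type,  # e.g. "General" or "MemoryOptimized"
                        "coreCount": core_count,      # cluster size for data flows
                        "timeToLive": ttl_minutes,    # keep cluster warm between runs
                    },
                }
            },
        },
    }

ir = managed_integration_runtime("DataFlowIR", core_count=16, ttl_minutes=10)
print(json.dumps(ir, indent=2))
```

A non-zero time-to-live trades a small idle cost for faster startup when many data flow runs execute back to back.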

Performance Optimization Strategies

Performance optimization is essential for ETL processes to run efficiently and meet business requirements. This section covers the main strategies and techniques for optimizing performance in Azure Data Factory.

Table: Performance Optimization Techniques

| Technique | Description |
|---|---|
| Parallel Processing | Distribute processing tasks across multiple nodes or compute instances to run data pipelines in parallel, reducing overall processing time. |
| Data Partitioning | Partition data into smaller chunks based on key attributes or criteria to enable parallel processing and optimize resource utilization. |
| Indexing and Caching | Create indexes on frequently queried columns and cache frequently accessed data in memory or storage to improve data retrieval performance. |
| Query Optimization | Optimize data queries and transformations to minimize resource consumption and reduce query execution time, improving overall pipeline performance. |
| Resource Scaling | Scale resources, such as compute instances and storage accounts, dynamically based on workload demands to ensure consistent performance and optimal resource utilization. |
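The data partitioning technique in the table can be illustrated with hash partitioning on a key attribute: every row with the same key lands in the same partition, so partitions can be processed in parallel without coordinating on shared keys. This is a generic Python sketch, not ADF's internal implementation; the `customer` field is hypothetical.

```python
import hashlib

def partition_key(key: str, num_partitions: int) -> int:
    # Use a stable hash: Python's built-in hash() for str changes between processes.
    digest = hashlib.md5(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_partitions

def hash_partition(rows: list[dict], key_field: str, num_partitions: int) -> list[list[dict]]:
    """Assign each row to a partition based on a hash of its key attribute."""
    partitions: list[list[dict]] = [[] for _ in range(num_partitions)]
    for row in rows:
        partitions[partition_key(str(row[key_field]), num_partitions)].append(row)
    return partitions

rows = [{"customer": f"c{i % 5}", "value": i} for i in range(100)]
parts = hash_partition(rows, "customer", 4)
print([len(p) for p in parts])
```

A stable hash matters because the same key must map to the same partition on every run and on every worker.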

Case Studies: Real-world Examples of Scalable ETL with Azure Data Factory

1. Case Study: Retail Industry

In the retail industry, many large e-commerce platforms use Azure Data Factory to manage surges of customer transaction data during peak holiday shopping seasons.

A purely real-time architecture may strain under heavy load without dedicated scalability planning. A hybrid architecture, with batch processing handling regular data updates and real-time processing driving instant order notifications, keeps the platform's data processing pipeline responsive and efficient.

2. Case Study: Healthcare Industry

A healthcare organization uses Azure Data Factory to centralize data integration across different healthcare systems and electronic health record (EHR) databases.

With a batch processing architecture and ADF's validation and error-checking capabilities, the organization can process high volumes of patient data in line with regulatory requirements and industry best practices.

3. Case Study: Financial Services Industry

A large bank in the financial services sector uses Azure Data Factory to centralize data management across its operations and to support decision-making.

With real-time processing capabilities and integration with Azure Synapse Analytics for advanced analytics and reporting, the bank can use its transaction data in near real time for fraud detection, risk management, and personalized customer experiences.

Best Practices for Scaling ETL Processes with Azure Data Factory

1. Design Principles for Scalability: Build data pipelines and their components on modular design principles, so that each piece can be reused independently and scaled horizontally or vertically as needed.

2. Performance Tuning Techniques: Tune partitioning, indexing, and caching settings so that data processing resources achieve maximum throughput with minimal latency.

3. Error Handling and Fault Tolerance: Build robust error handling with retry policies, checkpoints, and data validation rules, so that data integrity and reliability are preserved even when failures occur.

4. Security Considerations: Use ADF's built-in security features to protect sensitive information and secure data pipelines, limiting access to appropriate security principals and permissions to prevent unauthorized access and data breaches.
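The retry policies and checkpoints mentioned under error handling can be sketched in plain Python. Note that ADF's own activity retry policy uses a fixed interval between attempts; the exponential backoff below is a swapped-in technique suited to custom code (for example, in Azure Functions). The checkpoint is a high-watermark: only rows modified after the last stored timestamp are processed. The row shape and the `modified` field are hypothetical.

```python
import time
from datetime import datetime
from typing import Callable, TypeVar

T = TypeVar("T")

def run_with_retry(task: Callable[[], T], retries: int = 3,
                   base_delay_s: float = 30.0,
                   sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry a failing task with exponential backoff (30s, 60s, 120s, ...)."""
    attempt = 0
    while True:
        try:
            return task()
        except Exception:
            if attempt >= retries:
                raise  # out of retries: surface the original error
            sleep(base_delay_s * (2 ** attempt))
            attempt += 1

def incremental_load(rows: list[dict], last_watermark: datetime) -> tuple[list[dict], datetime]:
    """Return rows newer than the watermark, plus the new watermark to store."""
    new_rows = [r for r in rows if r["modified"] > last_watermark]
    new_watermark = max((r["modified"] for r in new_rows), default=last_watermark)
    return new_rows, new_watermark

source = [{"id": 1, "modified": datetime(2024, 1, 1)},
          {"id": 2, "modified": datetime(2024, 1, 2)}]
# No-op sleep so the example runs instantly.
batch, watermark = run_with_retry(lambda: incremental_load(source, datetime(2024, 1, 1)),
                                  sleep=lambda s: None)
print([r["id"] for r in batch], watermark)
```

If a run fails after its retries are exhausted, the stored watermark is unchanged, so the next run safely reprocesses the same window.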

FAQs


How does Azure Data Factory handle huge data volume?

Azure Data Factory handles large data volumes through distributed processing and parallel execution. Resources such as Azure Integration Runtimes, combined with data partitioning, maximize throughput and efficiency while minimizing processing time.


What are the cost implications of scaling ETL processes in Azure Data Factory?

Scaling ETL processes in Azure Data Factory increases costs, which depend on the resources used, the volume of data processed, and the Azure services involved.

To control costs while maintaining performance at scale, organizations should optimize resource usage, choose cost-effective pricing models, and monitor consumption closely.
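A rough way to reason about cost is to add up the main ADF billing meters: orchestration (per 1,000 activity runs), data movement (per DIU-hour), and data flows (per vCore-hour). The sketch below is a simplified estimator; the rates are deliberately left as inputs because prices vary by region and change over time, and other meters (such as pipeline activity execution hours) are omitted.

```python
from dataclasses import dataclass

@dataclass
class Rates:
    # Placeholder prices: look up current Azure pricing for your region.
    per_1000_activity_runs: float
    per_diu_hour: float
    per_vcore_hour: float

def estimate_monthly_cost(rates: Rates, activity_runs: int,
                          diu_hours: float, vcore_hours: float) -> dict:
    """Simplified ADF cost estimate from three main meters (sketch only)."""
    orchestration = activity_runs / 1000 * rates.per_1000_activity_runs
    data_movement = diu_hours * rates.per_diu_hour
    data_flows = vcore_hours * rates.per_vcore_hour
    return {
        "orchestration": orchestration,
        "data_movement": data_movement,
        "data_flows": data_flows,
        "total": orchestration + data_movement + data_flows,
    }

# Hypothetical rates and usage, chosen only to show the arithmetic.
rates = Rates(per_1000_activity_runs=1.0, per_diu_hour=0.25, per_vcore_hour=0.5)
print(estimate_monthly_cost(rates, activity_runs=30_000, diu_hours=100, vcore_hours=40))
```

Because data movement cost scales with DIU-hours, raising DIUs only pays off if it shortens copy duration proportionally; an estimate like this helps compare configurations before scaling up.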


Can Azure Data Factory integrate with other Azure services to scale resources when needed?

Yes. Azure Data Factory integrates with other Azure services, such as Azure Storage, Azure SQL Database, Azure Synapse Analytics, and Azure Databricks, which provide additional capabilities for scaling resources, optimizing performance, and running advanced analytics.


How do I monitor and troubleshoot my Azure Data Factory performance?

Azure Data Factory includes integrated monitoring and troubleshooting capabilities: integration with Azure Monitor, diagnostic logs, and dashboards that track the performance, health, and cost of your data pipelines.

Proactively review key metrics, set up alerts on them, and analyze performance trends so you can detect and resolve scalability issues early.

Conclusion

In summary, Azure Data Factory makes it possible to build scalable ETL processes, giving organizations a powerful solution for their data integration needs in a fast-growing business world.

This article has explored the principles of scalability, the primary architectural patterns, and the best practices that help organizations design and deploy robust, high-performance, scalable ETL solutions that foster innovation and drive business results.